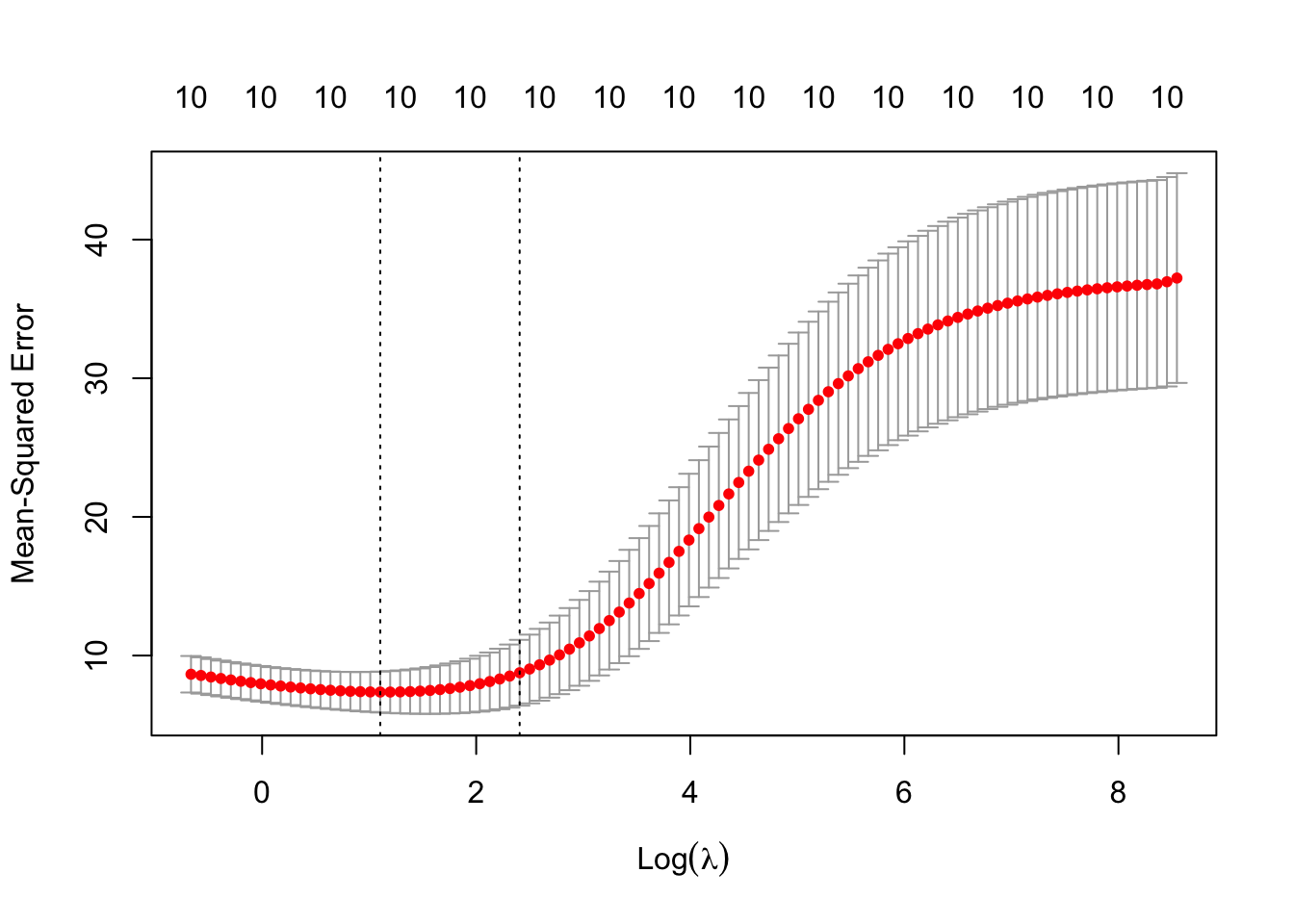

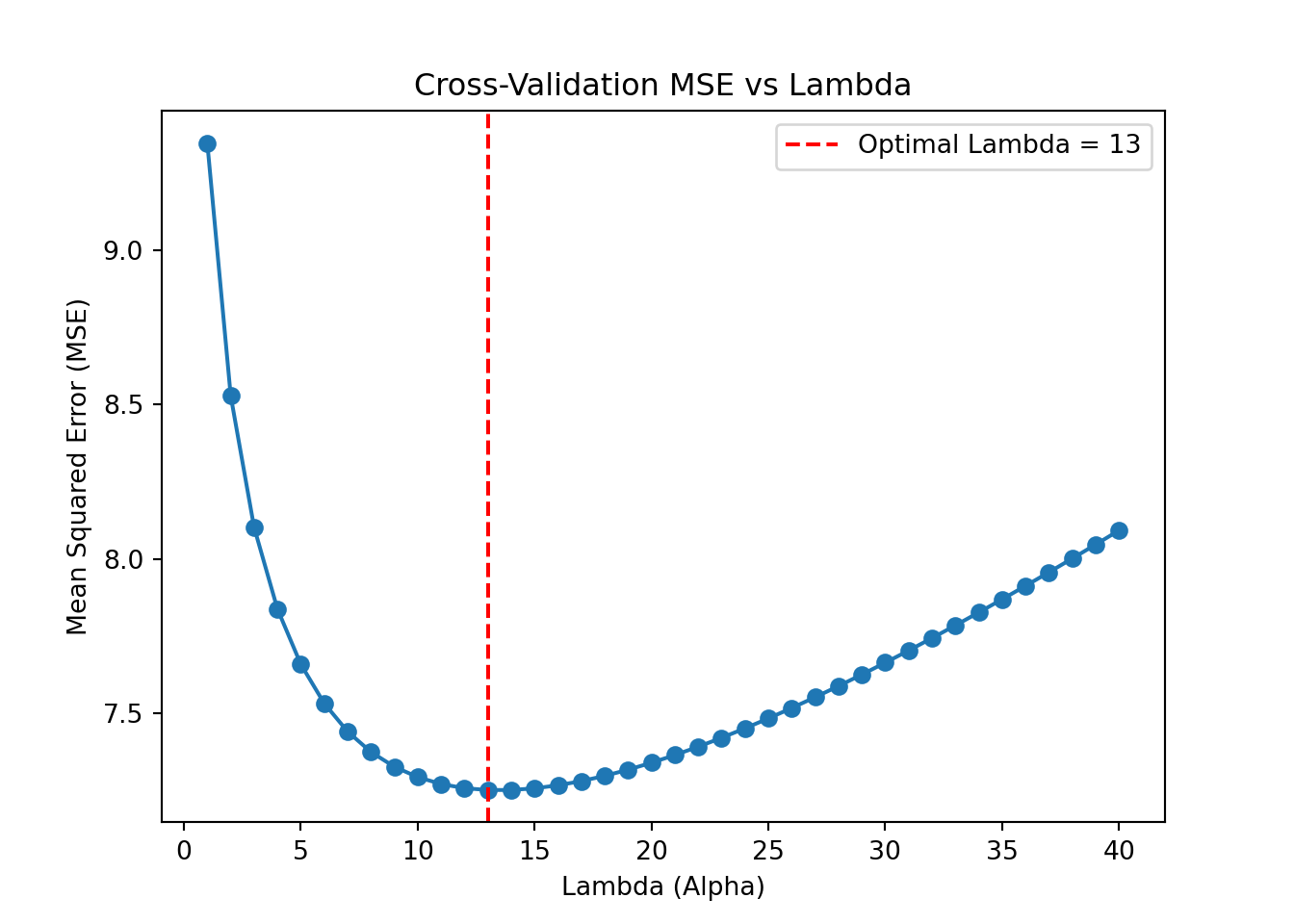

RidgeCV(alphas=array([5.00000000e-01, 5.48749383e-01, 6.02251770e-01, 6.60970574e-01,
7.25414389e-01, 7.96141397e-01, 8.73764200e-01, 9.58955131e-01,
1.05245207e+00, 1.15506485e+00, 1.26768225e+00, 1.39127970e+00,
1.52692775e+00, 1.67580133e+00, 1.83918989e+00, 2.01850863e+00,
2.21531073e+00, 2.43130079e+00, 2.66834962e+00, 2.92851041e+00,
3.21403656e+00, 3.52740116e+0...
5.88405976e+02, 6.45774833e+02, 7.08737081e+02, 7.77838072e+02,
8.53676324e+02, 9.36908711e+02, 1.02825615e+03, 1.12850986e+03,
1.23853818e+03, 1.35929412e+03, 1.49182362e+03, 1.63727458e+03,
1.79690683e+03, 1.97210303e+03, 2.16438064e+03, 2.37540508e+03,
2.60700414e+03, 2.86118383e+03, 3.14014572e+03, 3.44630605e+03,
3.78231664e+03, 4.15108784e+03, 4.55581378e+03, 5.00000000e+03]),
cv=10, scoring='neg_mean_squared_error')
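The repr above describes a `RidgeCV` estimator that searches a grid of regularization strengths with 10-fold cross-validation, ranking candidates by negative mean squared error. The following is a minimal sketch of how an estimator with this configuration could be built and fitted. It rests on two assumptions: the alpha grid is taken to be 100 log-spaced values from 0.5 to 5000 (inferred from the printed values, where each entry is about 1.0975 times the previous one; the truncated middle of the array is not recoverable), and synthetic data from `make_regression` stands in for the notebook's actual training set, which is not shown here.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import RidgeCV

# ASSUMPTION: 100 log-spaced alphas from 0.5 to 5000, inferred from the
# constant ratio (~1.0975) between consecutive printed values.
alphas = np.logspace(np.log10(0.5), np.log10(5000), 100)

# Hypothetical stand-in data; the notebook's real X and y are not shown.
X, y = make_regression(n_samples=200, n_features=10, noise=10.0, random_state=0)

# cv=10 makes RidgeCV run 10-fold cross-validation over the alpha grid
# instead of its default efficient leave-one-out scheme.
model = RidgeCV(alphas=alphas, cv=10, scoring="neg_mean_squared_error")
model.fit(X, y)

# alpha_ is the selected regularization strength; best_score_ is the mean
# cross-validated score for that alpha (negative MSE, so closer to 0 is better).
print("chosen alpha:", model.alpha_)
print("best CV score (negative MSE):", model.best_score_)
```

Because the scorer is `neg_mean_squared_error`, `best_score_` is always less than or equal to zero. Negate it to read the result as a mean squared error.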
